Does Artificial Intelligence Think?

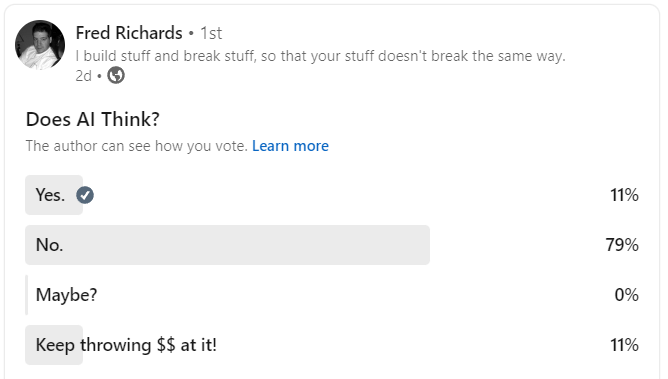

A few days ago, over on LinkedIn, a friend, Fred Richards, posted a poll question: Does AI think? 11% of people said "Yes" while the "No" camp took home 79% of the vote. "Maybe" stood alone with 0% of the vote, while the remaining 11% said, "Keep throwing $$ at it!"

Granted, the poll was all in fun. At least, I assume it was. Nevertheless, I gave my answer (I was one of the 11% who voted yes), along with an explanation. First, I explained, we need to pin down what intelligence means, so let me give it a try.

Intelligence is the ability to use information to accomplish tasks. The more intelligence, the more complex the problems that can be solved or the tasks that can be achieved. In that respect, almost every form of life is intelligent to varying degrees. So is a calculator, or my Roomba. Does having intelligence imply thinking? That's an open question. If we define thinking as sifting through information in an intelligent way to choose a specific solution to a problem, then yes, AI does think. Is AI conscious? Well, that's a separate topic from either intelligence or thinking.

And that's where I left my answer, but the more I thought about it, the more I wanted to say, so I'm saying it here.

You'd be hard pressed to find a universal definition of intelligence, or of what constitutes intelligence. Even today, there are several competing schools of thought on the subject. One of my favorites is Howard Gardner's theory of multiple intelligences, which suggests that there are eight distinct types of intelligence: linguistic, logical-mathematical, spatial, musical, bodily-kinesthetic, interpersonal, intrapersonal, and naturalistic. I like it, if only because of its heroic effort to count anything a human being might be capable of as "intelligence".

It also goes a long way, for now at least, toward excluding machines, whether robots or computers, from the intelligence game.

For machines, some people will suggest the Turing test, by which they usually mean the thought experiment proposed by computer pioneer Alan Turing. The actual Turing test is almost certainly irrelevant now, since most modern chatbots can pass it easily, certainly when chatting with "the average person", whoever this average person might be. There was once a prize for machine conversational intelligence, the Loebner Prize, designed as a Turing test with a cash award. As far as I know, the Loebner Prize hasn't been handed out since 2020, which means it's probably dead and gone.

A friend directed me to an article in New York Magazine titled "You Are Not a Parrot", by Elizabeth Weil, about linguist Emily M. Bender and her version of the Turing test. In it, Bender considers a hypothetical "hyperintelligent deep-sea octopus" that stumbles upon an undersea communication cable and somehow manages to listen in on the conversations of two people on separate islands. Over time, the octopus learns to communicate convincingly (à la ChatGPT), but it is eventually exposed as a fraud when it can't answer one islander's question about fending off a bear attack. It tries to answer, but the answer makes no sense. In short, the answer is bullshit!

Seriously. Go read the article.

The octopus argument is ridiculous for a number of reasons. First, octopuses are actually quite intelligent. And if we follow her logic, an octopus smart enough to tap into the communication hardware is already demonstrating an amazing level of understanding of a great many things. Go, octopus!

I can chat about a great many topics with varying levels of knowledge, but there are limits to what I know, however well educated I am. So, again following her argument, suppose I'm having great discussions with people about this and that, and eventually someone asks me how to repair a burst blood vessel in the brain. I take a shot at an answer. Have I now been exposed as a fraud, even though up until then I had convinced people of how well educated I was?

A human can "fake it" in a thousand different ways, and yes, some of those are bullshit, but what exactly is the complaint? That AI is now entering the realm of what humans on planet Earth do every single day?

Humans will also infer knowledge without trying to bullshit anyone, simply because it makes sense to them: if thing one works a certain way, and thing two, which is very similar, has a problem, then the fix for thing two is probably the same as when thing one broke down. That's not bullshitting. That's reasoning by analogy and being reasonably confident that the same solution applies.

One of the classic tests of whether a chatbot actually understands language is the Winograd test, or more accurately, the Winograd schema challenge (Hector Levesque, University of Toronto). It is considered a hardcore test of a computer's ability to understand natural language. GPT-4 passed the Winograd test with an accuracy of 94.4%. Compare that to humans who, in one test at least, scored at the 92% level. If you want to get cheeky, this means that GPT-4, at least, can understand natural language better than the average human.

For anyone who is either deeply interested in research papers or having trouble falling asleep, there's a paper called "Establishing a human baseline for the Winograd Schema Challenge" by David C. Bender at Indiana University Bloomington. You can read it here.

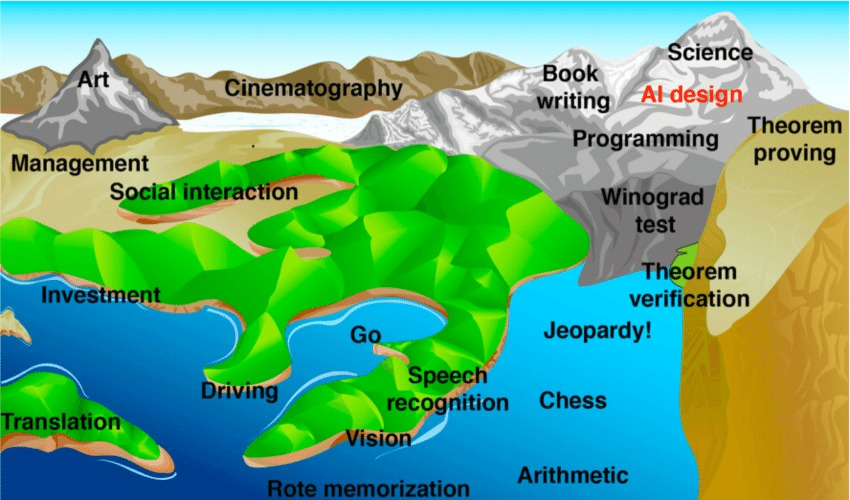

Of course, people will argue that AI will not be truly intelligent until it passes this test or that, whether that means beating someone at chess or Go, or passing the Winograd challenge mentioned earlier. This idea is well represented by Hans Moravec's illustration of the rising tide of AI capability, shown above. The illustration is at least six years old now, and the tide has risen sharply since.

I'm a little obsessed with the question of consciousness, but much of what I think on these topics has more to do with how people understand these systems. I feel it's okay to agree that, yes, these machines are capable of a kind of reasoning that goes beyond merely "regurgitating text", as some would say. There's probably more at work here than "autocorrect on steroids". Some of these systems display a kind of reasoning that places them above mere prediction engines, and I think that opens up a host of questions we need to confront.

Again, I'm not saying they're conscious, but they might be intelligent, and they might, on some level at least, think (whatever that means).

Feel free to jump into the discussion. You can also tell me that I'm out to lunch, and explain why. :-)

Until next time.